✦

Big Tech is running out of room to hide behind “engagement.” As the EU pushes TikTok to turn off addictive features and audiences tune out AI-generated slop, the growth-at-all-costs era is starting to crack. That’s why Anthropic’s ethics experiment matters: instead of bolting on more rules, it’s betting AI can learn judgment and balance usefulness with safety. Risky? Absolutely.

Keep reading for more.

-🕶️

✦

Seven bullets of updates

🏗️ Amazon’s $200B AI data center splurge has investors questioning when the payoff will show up.

🤖 Users mourn the loss of their AI companions as OpenAI’s shutdown of GPT-4o sparks a wave of emotional backlash and highlights the risks of deep AI attachment.

🛰️ Plans for orbital data clusters crystallize, promising up to 10x faster AI processing in space.

🔎 Reddit eyes big gains, betting that AI-powered search will be an "enormous" revenue driver and putting its growing search business in the spotlight.

📱 EU tells TikTok to disable "addictive features" like infinite scroll after probing design choices that keep users hooked.

🤖 Audience engagement slumps as viewers tire of AI-generated content, with nearly half saying they'd skip AI-made productions.

🌳 TerraDot snaps up a rival as stubbornly high carbon removal costs drive a wave of consolidation in the market.

✦

Anthropic’s Ethics Experiment

Photo by Markus Spiske on Unsplash

Anthropic is taking an unusual approach to AI safety. It released a dark warning about AI risks, alongside an updated ethical “constitution” written by its in-house philosopher, Amanda Askell. That document tells its AI, Claude, to use its own judgment when balancing usefulness, honesty, and safety. In plain terms, Anthropic is betting that AI can develop something like “wisdom.” The twist: the company is letting the AI help decide what ethical behavior looks like.

For builders, the stakes are clear. If this works, AI companies may be able to grow faster without relying on rigid safety rules that break easily. If it fails, regulators could crack down harder, legal risks could rise, and customers may start demanding proof that systems are being tested and governed. The takeaway: build safety into your product now, with clear controls, shutdown options, and incident response plans, because shipping without guardrails won't be an option for long.

✦

The RIGHT Way to Calculate Your Market Size (TAM/SAM/SOM)

Your TAM (Total Addressable Market) is not the size of the problem. It's not a percentage of a trillion-dollar industry. And it's definitely not a number you can pull from a Google search and shrink down to fit your pitch.

In this video, we break down exactly how to build a solid TAM slide for your pitch deck, one that investors will actually take seriously. We'll debunk common mistakes, walk through bottom-up calculations, and show you how to estimate SAM and SOM the right way.
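A bottom-up estimate starts from customers you can actually count and multiplies by what each one pays per year, rather than shrinking a big industry figure. The short Python sketch below illustrates that idea under stated assumptions: the helper name `bottom_up_sizing` and every number in it (50,000 target customers, 20,000 reachable, 1,000 winnable, $1,200 per year) are hypothetical placeholders, not figures from the video.

```python
from dataclasses import dataclass


@dataclass
class MarketSizing:
    """Annual revenue for each market layer, in the same currency as the price."""
    tam: int
    sam: int
    som: int


def bottom_up_sizing(
    total_customers: int,
    reachable_customers: int,
    winnable_customers: int,
    annual_contract_value: int,
) -> MarketSizing:
    """Hypothetical helper: size each layer as customer count x annual price.

    TAM: every customer who could plausibly buy the product.
    SAM: the subset your product and go-to-market can actually serve.
    SOM: the subset you can realistically win in your planning horizon.
    """
    # Each layer must be a subset of the layer above it.
    if not 0 <= winnable_customers <= reachable_customers <= total_customers:
        raise ValueError("expected 0 <= winnable <= reachable <= total customers")
    return MarketSizing(
        tam=total_customers * annual_contract_value,
        sam=reachable_customers * annual_contract_value,
        som=winnable_customers * annual_contract_value,
    )


# Made-up illustrative inputs (assumptions, not real market data).
sizing = bottom_up_sizing(50_000, 20_000, 1_000, 1_200)
print(f"TAM ${sizing.tam:,}  SAM ${sizing.sam:,}  SOM ${sizing.som:,}")
# prints "TAM $60,000,000  SAM $24,000,000  SOM $1,200,000"
```

Keeping the price fixed and shrinking only the customer count makes each number easy to trace back to a source; a fuller model might price each segment differently.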

✦

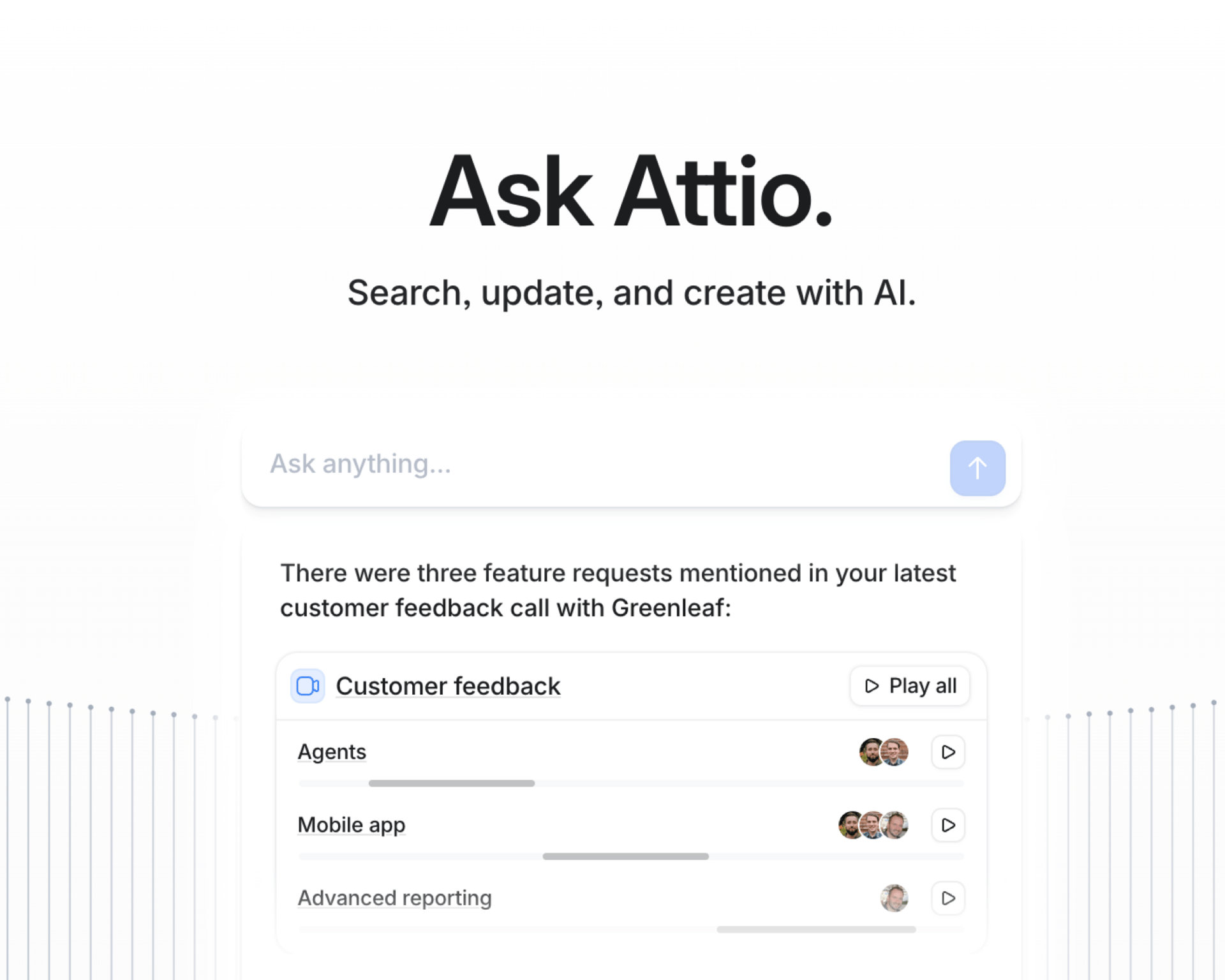

Attio is the AI CRM for modern teams.

Connect your email and calendar, and Attio instantly builds your CRM. Every contact, every company, every conversation, all organized in one place.

Then Ask Attio anything:

Prep for meetings in seconds with full context from across your business

Know what’s happening across your entire pipeline instantly

Spot at-risk deals before they go sideways

No more digging and no more data entry. Just answers.

✦

🧪 An "ugly" MVP surfaced trust gaps; a 2018 fax signup proved you can learn fast without polish before you scale.

🤖 AI is cutting clicks, but you can launch one of 5 AI-proof plays and surf the 21% AI-overview shift in 2026.

🏢 Founders eyeing a 9-to-5? Here's how to pitch your startup wins for corporate roles, and why 2/3 of hires start on LinkedIn.

Financial Modeling Bootcamp for Startup Founders

Leveraging over 12 years of hands-on startup experience, our CEO, Caya, created a practical financial modeling bootcamp for startup founders.

The course helps founders develop clear, investor-ready projections, better understand their fundraising needs, and track the core KPIs used to guide day-to-day and strategic decisions.

✦

Social media’s “engagement era” is on trial

Photo by Wesley Tingey on Unsplash

Juries are about to hear the first major test case claiming that social media features like autoplay, infinite scroll, and algorithm-driven feeds are addictive to kids and harm their mental health. Instead of blaming specific posts, the lawsuits focus on product design—basically arguing that platforms should slow down engagement, not police every piece of content. Snap and TikTok have already settled. Meta and Google deny the claims and say they’ve built strong protections for teens.

If jurors agree with the plaintiffs, the case could force companies to turn over internal documents and give lawyers leverage to push for large settlements and new "safety-by-design" rules through courts and lawmakers. If the companies win, it could shut down similar lawsuits and keep recommendation algorithms shielded by free-speech protections and Section 230. Either way, the outcome will shape how much responsibility courts place on social media platforms to protect kids.

✦

Startup Events and Deadlines

How to Pitch an AI Company to Investors | Feb 12 | Webinar

AI Tech & Startup Night — San Francisco | Feb 25 | San Francisco